Web Responders

Table of Contents

Billable Support Feature Web Responders What are Web Responders? Supported Verbs 📘 📘Billable Support Feature

If required, Professional Services are available based on an accepted quote and availability of resources.

Web Responders

What are Web Responders?

Web Responders (To Web) is an available responder application. It can be invoked for both incoming and outgoing calls.

The process generally looks like this: (1) contact support to create a dial translation in the Core server and then (2) use or host code that will respond and drive the call.

Things to Remember

Remember the following concepts when working with Web Responders:

- In Dial Translations, the Responder Application is called To-Web. The To-Web application first takes a single parameter: the URL of the Web Responder application. When the To-Web application is invoked, IVR control is passed to the Web Responder application at the given URL.

- Whether the call is being forwarded on-net or off-net, you need to supply the “uid,” which is the “owner” of the call. Optionally, you can also supply both “origination” and “destination," which are also passed through the dial plan.

- For all Web Responder verbs to work, we recommend using the logic "forward to user" rather than "forward to web responder". The call flow should be routed through a user or system user. You can direct inbound calls to a Web Responder application by routing them to a system user and then forwarding them to a dial plan entry that selects the "To Web" application. You can place an outbound call by dispatching an API call that will connect the remote end with that Web Responder, like so (the user in this example is "999@domain"):

Example Scenario

This is an example of a "math" application. The application prompts the caller for two numbers and says the sum. The application demonstrates (a) a multi-step application and (b) storing state in a server-side php session. For this application to function, you must also create a file in http://localhost/tts.php and set up an account with a TTS service.

URL Parameters Provided

When the Core Module posts back to the Web Responder application, the URL may contain the following parameters (regardless if it is inbound or outbound):

| URL Parameter | Example |

|---|---|

| NmsAni | caller (e.g. "1001") |

| NmsDnis | callee (e.g. "2125551212") |

| AccountUser | the account user |

| AccountDomain | the account domain |

| AccountLastDial | last dialled digits by the account user |

| Digits | received digits |

| OrigCallID | call ID of the Orig leg |

| TermCallID | call ID of the Term leg |

| ToUser | the user input to the responder |

| ToDomain | the domain input to the responder |

Verbs

Verbs usually take an "action" attribute. If the "action" attribute is present, then control is returned to the URL given by the "action" attribute. If not present, then the application ends.

Forward

The <Forward> action forwards to the given destination. The <Forward> verb does NOT take an "action" parameter. It is effectively a "goto".

| Forward Attribute | Description |

|---|---|

| ByCaller | Specifies using the Caller's Dial Plan (instead of the Callee's) in the Web Responder script. Without this attribute, the inbound call has to route through a user first to use their dial plan, and the destination isn't as expected. |

Here are ByCaller instructions:

- Add the "ByCaller" attribute to Forward.

root@[redacted]:/var/www/html# cat forward.php

<Forward ByCaller='yes'>+141625[redacted]</Forward>

- User sets forward to web responder.

- The caller dials directly into the web responder. The forward will work as expected.

CNmsUaSessionStateMsgInfoTest(20230130154940025425)('ByCaller','exist') - MsgInfo

<ByCaller> exist

LookupResponder(sip:ByCaller@Forward)

- Found mode=(forward_call)

LookupNextResponder case(1)

Translate

from

<Forward@>

by (ByCaller)(Forward)

to (sip:ByCaller@Forward)(sip:ByCaller@Forward)Gather

The <Gather> action gathers the given number of DTMF digits and posts back to the given action URL, optionally playing the given .wav file.

In this example, it gathers 3 digits and posts back the relative URL "handle-account-number.php?Digits=555":

| Gather Attribute | Description |

|---|---|

| numDigits | This is the number of digits to gather (such as '1') (default=20) |

| Digits | The verb posts back to the given action URL with the gathered digits (such as 123). |

Play

The <Play> action plays the given .wav file and posts back (returns control) to the given action URL (must be in WAV format).

In this example, it plays the WAV file and then posts back to continue .php. To end the call, omit the action parameter:

Supported Verbs

Starting in v44, the following verbs have either been added or given enhanced functionality. No functionality has been removed from verbs. This release is a feature enhancement; your existing verbs will not break.

A Voice Services integration is required for these enhancements to work as expected. Deepgram is the recommended vendor in order to utilize all available voice attributes.

Response

The <Response> verb is designed to enhance the efficiency and structure of XML responses. This verb simplifies call control by allowing multiple verbs to be encapsulated within a single <Response> element, eliminating the need for multiple requests to perform multiple sequential actions.

Previously, executing multiple verbs required individual requests. With the introduction of the <Response> verb, developers can streamline call flows by listing multiple verbs under a single request. As the call progresses, the Core Module will sequentially move through the verbs listed within the <Response> element, executing each in the specified order. Call control will move to the first action listed, or if it reaches the end, then it will disconnect the call since the <Response> is finished.

Here is an early exit example. The third <say> won't execute because the call flow will be transferred to "next.php" specified by the action at the second <Say>.

Echo

The <Echo> verb allows for real-time audio feedback, enabling immediate playback of user input to facilitate testing. For instance, it might be used to immediately return received audio or input bamustck to the sender. This could be used for testing or feedback purposes within a voice application. If a user says something, the system could use "Echo" to repeat the user's words. If the timeout is not configured, then the "echo" will continue until the user hangs up.

| Echo Attribute | Description |

|---|---|

| timeout | For an "Echo" verb, this attribute controls how many seconds the system will keep echoing the media back to the caller. It defaults to 0, which means unlimited, and the caller will have to drop the call. Timeout is configured in seconds ("5" means 5 seconds). |

Say

The <Say> verb instructs the system to convert text into speech during a phone call, so that the caller hears the text as spoken words. It uses TTS to convert text to audio sentences.

This verb relies on the API and the Voice Services integration to convert the sentence to audio for playback.

| Say Attributes | Description |

|---|---|

| voice | This attribute controls the voice used for a "Say" verb. It accepts either a gender (male, female), a voice name, or voice id. Be careful using a voice ID if you also set language. If you choose a voice ID AND language that do not match, then the system will default back to the default voice for your domain. |

| language | BCP-47 language code. Like en-US, pt-BR, fr-CA. |

Gather

The <Gather> verb already existed, but it can now also collect speech transcripts via voice (along with pressed digits).

On a technical level, this verb instructs the system to collect DTMF, speech, or both. If DTMF is specified and DTMF is collected, a request will be sent with the “Digits” param back. If speech is specified, it is collected through speech_cmd and a request is sent back with the transcript in “SpeechResult”.

<Gather> can contain nested verbs like <Say> or <Play> that can execute while the system is gathering speech or DTMF.

It relies on the API and the Voice Services integration to convert the sentence to audio for playback. We recommend that you use Deepgram as your default vendor to utilize all of the available voice attributes.

| Gather Attributes | Description |

|---|---|

| numDigits | Max number of DTMF digits expected to collect. |

| input | Specify input type. Add "speech" and/or "dtmf" to collect an audio transcript and/or a sequence of DTMF digits. |

| hints | Provide hints of possible words, that are expected to be captured in the transcript. This attribute is especially useful for a directory; providing a list of expected last names will increase accuracy. |

| timeout | DTMF input timeout |

| digitEndTimeout | Sets the maximum time interval between successive DTMF digit inputs |

| language | Language code used on speech collection. |

Hangup

The <Hangup> verb hangs up the call. You can't nest any verbs within it; it can only be nested within a <Response>.

Stream

The <Stream> verb bidirectionally streams media in & out via a WebSocket server in near real-time. Developers can both intercept and inject live audio, making it invaluable for applications requiring real-time audio processing, such as AI-driven analysis and interactive voice response systems.

Stop a Stream

The application can send a stop event to close the stream through the WebSocket. Upon receiving a "stop" event, the <Stream> will stop transmitting. If it is a Sync stream, then it will exit and proceed to the next Web Responder action.

There are two ways to start a Stream: asynchronous (listen only) & synchronous bi-directional (transmit & listen).

Asynchronous Stream

When used within a "Start" verb, "Stream" enables asynchronous audio streaming. It's NOT bidirectional; it's only in one direction. This allows the call to proceed while audio is being transmitted. Asynchronous audio is streamed to the WebSocket URL.

In the following example, audio is streamed to wss://example.com/audio while the call continues with the next instruction, preventing call interruption. If no further instructions are provided, the call may be disconnected; therefore, it is advisable to include subsequent Web differentResponder instructions to continue the call session.

Stopping an Asynchronous Stream

If a unique name was used to start the asynchronous stream, then the stream can be stopped at any time by providing its name within a "Stop" verb, like so:

Synchronous Bi-directional Streaming

For applications requiring synchronous bi-directional streaming where you might need to send audio back to the call or interact with the caller based on the streamed audio, you should use the "Stream" verb within the "Connect" verb. This facilitates a two-way audio stream between the call and your application, enabling interactive scenarios.

| Stream Attributes | Description |

|---|---|

| url | The WebSocket URL where the audio stream will be sent. Ensure this URL uses a secure (wss) protocol and can handle WebSocket connections. |

| track |

This specifies which audio track to stream. There are 3 possible values: inbound (incoming audio), outbound (outgoing audio), and both (both tracks). -inbound: this value indicates that only the audio coming into the Core Module. So from the external party (e.g., the caller's voice) is included in the stream. Use this setting if you are only interested in analyzing or processing what the caller says. -outbound: this value indicates that only the audio being sent from the Core Module to the external party (e.g., the agent's voice) is included in the stream. Select this option if your focus is on what the agent or automated system says to the caller. - both: this setting streams both inbound and outbound audio tracks. It is useful for full conversation analysis, such as enabling applications to process and analyze the entire dialog between the caller and the agent or automated system. |

| name | An optional identifier for the stream, useful for distinguishing between multiple streams in your application. |

Custom Parameters

Custom parameters can be added to the "Stream" verb to pass additional information to the WebSocket server. These parameters are sent with the "start" WebSocket event and can be used to provide context or session-specific data.

In this example, customerID and callType are passed along with the audio stream, allowing the server to tailor the processing based on these parameters. These parameters allow for the transmission of contextual information or session-specific data to the receiving application, enhancing the call's processing and analysis.`

| Parameter Attributes | Description |

|---|---|

| name | The parameter's name, acts as the key in the key-value pair sent to the endpoint. |

| value | The parameter's value, acts as the value in the key-value pair. |

| delete |

Life Cycle of a <Stream> Verb

In the life cycle of a "Stream", several types of events are The parameter's name acts as the key in the key-value pair sent to the endpoint.represented through WebSocket messages: Connected, Start, Media, and Stop. Each message sent over the WebSocket is a JSON string. The event property within each JSON object identifies the type of event occurring.

Connected Message

The Connected message is the first to be sent once a WebSocket connection has been established. Its attributes are:

- event: a string value "connected" indicating the establishment of the connection

- version: indicates the semantic version of the protocol

{

"event": "connected",

"version": "1.0.0"

}Start Message

Sent immediately after the Connected message, the Start message contains essential information about the Stream and is dispatched only once. Its attributes are:

- event: a string value "start"

- sequenceNumber: tracks the order of message delivery, starting with "1"

- start: an object containing Stream metadata such as stream_id, call_id, expected tracks, customParameters, and mediaFormat.

{

"event": "start",

"sequenceNumber": "1",

"start": {

"stream_id": "2d9be7d7-4a66-4667-b2e1-cf3fa4e66306",

"call_id": "vmj74pd5nmm9jlukvbfg",

"tracks": [

"inbound",

"outbound"

],

"customParameters": {

"client_id": "123123123",

"status": "1"

},

"mediaFormat": {

"encoding": "audio/x-mulaw",

"sampleRate": 8000,

"channels": 1

}

}

}Media Message

A Media message encapsulates the raw audio data within the stream. Its attributes are:

event: a string value "media"

sequenceNumber: increments with each new message

media: contains the audio payload and additional metadata like track, chunk and timestamp

chunk: this attribute signifies the sequence of the audio packets being sent on that track. The first message will start with chunk "1" and increment with each subsequent message. This sequential numbering helps ensure that audio frames are processed in the correct order, maintaining the integrity of the conversation.

timestamp: this represents the presentation timestamp of each audio chunk relative to the start of the stream, in milliseconds.

{

"event": "media",

"sequenceNumber": "3",

"media": {

"track": "outbound",

"chunk": "1",

"payload": "base64AudioData",

"timestamp": "1708388604"

}

}Stop Message

A Stop message indicates the Stream's termination or the call's end. Its attributes are:

event: a string value "stop"

sequenceNumber: increments with each new message

stop: contains metadata about the Stream session

bytesSent: this indicates the total amount of data transmitted during the stream for each enabled track.

duration: This reflects the total duration of the audio stream for each track, typically measured in seconds.

{

"event": "stop",

"sequenceNumber": "416",

"stop": {

"tracks": [

{

"type": "inbound",

"bytesSent": 66240,

"duration": 8

},

{

"type": "outbound",

"bytesSent": 99114,

"duration": 10

}

],

"call_id": "24j83iflgottdokd39vt",

"stream_id": "6ccf2088-6755-4861-a162-3c6c30d6ad07"

}

}Wait

The <Wait> verb can be used to include configurable pauses within a call flow. It instructs the system to pause for a specified duration before proceeding with the next instruction in the sequence. This can be particularly useful for creating delays within voice interactions, such as spacing out verbal prompts to make them easier for the user to understand. If a wait timeout is not configured, then the system will default to waiting 1 second.

| Wait Attribute | Description |

|---|---|

| timeout | For a "Wait" verb, this attribute controls for how many seconds the Core Module will wait before executing the next step. It defaults to 1, which means it will wait 1 second. |

Example Use Cases

| Verb(s) | Instructions |

|---|---|

| echo (without a timeout) | <Echo/> |

| echo (with a timeout) | <Echo timeout=”15”></Echo> |

| wait (without a timeout) | <Wait/> |

| wait (with a timeout) | <Wait timeout=”5”></Wait> |

| wait (with a timeout) within a response |

<Response> <Say>Hold on, we are connecting your call.</Say> <Wait timeout="5"/> <Say>Thank you for waiting.</Say> </Response> |

| echo & wait together within a response |

<Response> <Say> Wait for 5 seconds and test your microphone and speaker by talking to yourself after the tone</Say> <Wait timeout='5'></Wait> <Echo timeout='15'></Echo> </Response> |

| say | <Say>Hello, this is a simple text-to-speech message.</Say> |

| gather speech & say in the appropriate language |

<Gather input='dtmf speech' action='process_action.php’> <Say language=‘en-US’ voice=‘amy’> Hi, how can I help you today ? </Say> </Gather> |

| gather DTMF digits and send to “ivr.php” | <Gather numDigits="1" action="ivr.php"></Gather> |

| gather 10 digits and send to “account.php”, play an audio that requests the account number |

<Gather input="dtmf" numDigits="10" action="account.php"> <Play>https://myhost.com/enter-your-account-number.wav</Play> </Gather> |

| gather 1 digit DTMF and use TTS |

<Gather numDigits="1" action="next.php"> <Say voice="female">Enter one to continue</Say> </Gather> |

| play greeting, gather 1 digit DTMF, and say audio |

<Response> <Play>Welcome to CompanyName</Play> <Gather numDigits="1" action="ivr.php"> <Say voice="female">Enter 1 or say sales to connect to sales, enter 2 or say support to connect to support</Say> </Gather> </Response> |

| gather speech, say a difference language |

<Gather input="speech" hints="Thiago Vicente" language="pt-BR" action="ivr.php"> <Say voice="male" language="pt-BR">Olá, com quem você gostaria de falar?</Say> </Gather> |

| gather speech, use a custom format, say audio |

<Gather input="speech" model="nova-2-general" numDigits="1" action="http://myhost.com/app/stashvmail.php"> <Say voice="female">Hello, thanks for calling, leave a message now</Say> </Gather> |

| gather speech, disable smartFormat, enable numerals |

<Gather input="speech" smartFomart="no" numerals="yes"> <Say voice="female">Hello, Say some numbers now</Say> </Gather> |

| say audio in the default language and voice |

<Response> <Say>This will play using the default voice and language associated with the Web Responder system user/domain</Say> </Response> |

| say audio in a male or female voice |

<Response> <Say voice="male">Hello there! How are you?</Say> <Say voice="female">Hi! Nice to meet you</Say> </Response> |

| say audio in a specified language |

<Response> <Say language="en-AU">G'day! How's it going?</Say> </Response> |

| say audio in a specified language and gendered voice |

<Response> <Say voice="male" language="es-ES">¡Hola! ¿Cómo estás?</Say> </Response> |

| say audio in a specified language and voice ID |

<Response> <Say voice="pt-BR-Wavenet-C" language="pt-BR">Olá! Como você está?</Say> </Response> |

| asynchronous audio stream |

<Response> <Start> <Stream url="wss://example.com/audio"> </Stream> </Start> <Say>This will execute after connecting the stream</Say> </Response> |

| stop a stream |

<Response> <Stop> <Stream name="mystream" /> </Stop> </Response> |

| Synchronous Bi-directional Streaming |

<Connect> <Stream url="wss://example.com/audio"> </Stream> </Connect> |

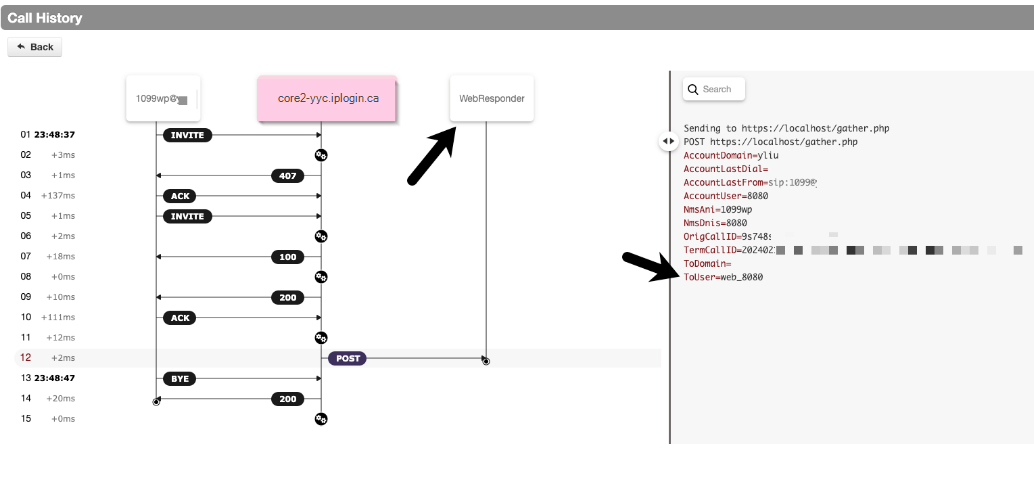

Web Responders in a Call Trace

Starting in v44, Web Responders will now show as a call participant in the call trace.

Here is an example testing scenario:

- Contact Support to create a dial translation for the Web Responder using the application "To Web".

- Use the "destination" of the above dial translation and set it as a user's forwarding rule.

- Call the user. The following trace should show the Web Responder as the call participant ("POST").

Web Responder Enhanced Security

A new system property, WebRespSecret, defines a global secret key used for signing all Web Responder requests. This property is not set by default, meaning no signature is appended to the request.

There is also a new token, HttpSecret, which facilitates the customization of the signing secret on a per-request basis via the dial-rule parameter. This allows for flexibility in scenarios where different Web Responders need different secrets.